Recently, the National Highway Traffic Safety Administration (NHTSA) announced a formal investigation into Tesla's Autopilot driving system, following several crashes in which Tesla cars struck parked emergency vehicles. The investigation covers about 765,000 Tesla vehicles built since 2014, and one death and 17 injuries have been confirmed.

This may be a good moment to step back from the hype around so-called “auto driving” and look more closely at the technology underneath it: advanced driver assistance systems (ADAS). Asking why these advanced cars keep crashing into parked vehicles is not mere conservatism; people’s lives are at stake.

The Doppler trap: stationary objects

Surprisingly, the biggest blind spot of automated cars is stationary objects. Here is why.

Adaptive cruise control (ACC), one of the first steps toward automated driving, relies on a vehicle-mounted millimeter-wave radar. Radar measures distance by timing how long an emitted radio wave takes to bounce off an obstacle and return: multiplying that round-trip time by the speed of light and halving it gives the range. Ranging alone, however, does not give speed. To get speed this way, the radar has to measure the distance twice and divide the change by the elapsed time, which is not accurate enough and introduces a lag, so the speed is never truly current. That is not good enough for autopilot.
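The ranging step and the "measure twice and divide" approach to speed can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any real radar's firmware: the function names, the 400 ns echo and the 50 ms interval are hypothetical values chosen for round numbers.

```python
# Sketch: radar ranging by time of flight, and speed by differencing two ranges.
# Function names and example numbers are hypothetical, for illustration only.

C = 299_792_458.0  # speed of light in m/s


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflector in metres.

    The wave travels out and back, so the one-way distance is half of c * t.
    """
    return C * round_trip_s / 2.0


def speed_by_differencing(range1_m: float, range2_m: float, interval_s: float) -> float:
    """Closing speed in m/s (positive = approaching) from two ranges taken interval_s apart.

    Any error in either range is divided by a short interval, so it is magnified,
    and the result describes the past interval rather than the present moment.
    """
    return (range1_m - range2_m) / interval_s


if __name__ == "__main__":
    print(f"range: {range_from_round_trip(400e-9):.2f} m")  # a 400 ns echo, about 59.96 m
    print(f"closing speed: {speed_by_differencing(60.0, 58.5, 0.05):.1f} m/s")
    # A 0.1 m error in one range over the same 50 ms moves the estimate by 2 m/s.
    print(f"with 0.1 m error: {speed_by_differencing(60.0, 58.6, 0.05):.1f} m/s")
```

The last line shows why differencing is fragile: a range error of only 10 cm turns a true 30 m/s closing speed into 28 m/s.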

The Doppler effect is an increase (or decrease) in the frequency of sound, light or other waves as the source and observer move toward (or away from) each other. It causes the sudden change in pitch you hear as a siren passes, as well as the redshift seen by astronomers.

In other words, waves reflected from an object moving toward us come back at a higher frequency (shorter wavelength), and waves reflected from an object moving away come back at a lower frequency (longer wavelength), compared with waves reflected from an object that is stationary relative to us.
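For a radar the wave makes a round trip, so the shift is applied twice. For a target closing at radial speed v, much smaller than the speed of light c, the standard two-way result and its first-order approximation are shown below. The 77 GHz carrier in the worked number is an assumed, typical automotive value, not a figure from this article.

$$
f_r = f_0\,\frac{c + v}{c - v} \approx f_0\left(1 + \frac{2v}{c}\right)
\quad\Longrightarrow\quad
f_d = f_r - f_0 \approx \frac{2 v f_0}{c}
$$

$$
f_0 = 77\ \text{GHz},\; v = 30\ \text{m/s}:\qquad
f_d \approx \frac{2 \cdot 30 \cdot 77\times 10^{9}}{3.0\times 10^{8}} \approx 15.4\ \text{kHz}
$$

An approaching target (v > 0) gives a positive shift and a receding one a negative shift, which is how the radar tells the two apart.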

By measuring the sign and size of this frequency shift, the radar obtains the relative speed of an object approaching us or moving away from us. Since our own speed is known, the object's actual speed then follows from a simple calculation.
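That calculation can be sketched in Python, assuming a 77 GHz carrier and a target straight ahead so that no angle correction is needed. The function names are hypothetical, and the formula is the first-order two-way Doppler approximation f_d ≈ 2·v·f0/c.

```python
# Sketch: recover speeds from a measured two-way Doppler shift.
# Assumes a 77 GHz carrier and a target straight ahead (no angle correction);
# all names are hypothetical.

C = 299_792_458.0  # speed of light, m/s
F0_HZ = 77e9       # assumed carrier frequency, Hz


def doppler_shift(closing_speed_mps: float, f0_hz: float = F0_HZ) -> float:
    """Two-way Doppler shift in Hz for a given closing speed (positive = approaching)."""
    return 2.0 * closing_speed_mps * f0_hz / C


def closing_speed(f_doppler_hz: float, f0_hz: float = F0_HZ) -> float:
    """Inverse of doppler_shift: closing speed in m/s from the measured shift."""
    return f_doppler_hz * C / (2.0 * f0_hz)


def target_ground_speed(ego_speed_mps: float, f_doppler_hz: float) -> float:
    """Ground speed of a target ahead travelling in our direction.

    Closing speed = our speed - target speed, so target speed = our speed - closing speed.
    """
    return ego_speed_mps - closing_speed(f_doppler_hz)
```

For example, at our own speed of 30 m/s, a car ahead doing 20 m/s returns a shift of about 5.1 kHz and `target_ground_speed(30.0, doppler_shift(10.0))` recovers 20 m/s, while a parked car returns about 15.4 kHz and `target_ground_speed(30.0, doppler_shift(30.0))` comes out at zero, up to floating-point rounding. That zero is exactly what makes a parked car look like the road itself.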

More importantly, the Doppler effect gives speed from a single measurement, so it can be treated as real-time speed instead of measuring the distance twice and dividing the change by the elapsed time as before. It is much more accurate and has far less delay, which makes it well suited to autonomous driving and driver assistance systems. This is exactly how the vehicle-mounted millimeter-wave radars we are familiar with today obtain the speed of other vehicles on the road.

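However, relying on the Doppler effect creates its own problem. Seen from a moving car, a stationary object produces the same Doppler shift as the road surface, guardrails, signs and bridges, so the radar tends to treat every stationary object as part of the background, even though these objects reflect radio waves too.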

This explains how such accidents can happen: the cars classify stationary vehicles as background rather than as obstacles. It is also why many owner’s manuals for cars with these systems warn that the system may not stop for a vehicle ahead that is moving slowly or standing still.

How millimeter-wave radar sees the world, compared with visual recognition

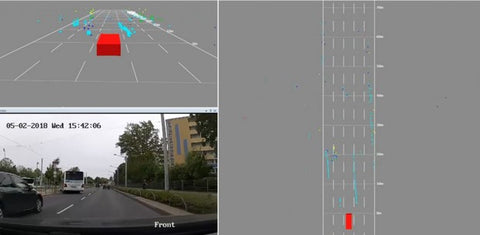

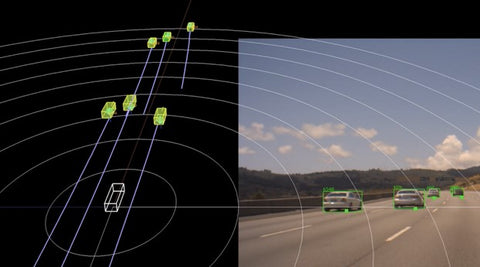

In the eyes of cars(radar sensors), the world is undoubtedly different from ours. The millimeter-wave radar sensors uses the Doppler effect to identify the objects in movement (their speed and velocity), while the still objects are still hard to identify, that’s because the world in radar’s eyes is like the picture below:

Which is quite different to what we thought it was as the picture shows in below:

At present, the millimeter-wave radars in use cannot form an image of the objects they detect, and they cannot tell how high above the road an object is. To such a radar, an overhead road sign and a parked car look the same. To avoid this recognition error, millimeter-wave radars usually simply discard stationary and slow-moving objects and leave them to the visual recognition system.
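The shortcut of discarding stationary objects can be sketched as a naive speed-based filter. This is a hypothetical simplification, not any manufacturer's pipeline: the `Detection` fields, the 1 m/s threshold and the example scene are assumptions, and the record deliberately has no height field because the radar is assumed unable to measure elevation.

```python
# Sketch of a naive stationary-clutter filter (hypothetical, for illustration only).
# There is no height field: the radar is assumed unable to measure elevation.

import math
from dataclasses import dataclass


@dataclass
class Detection:
    name: str
    range_m: float
    azimuth_deg: float        # angle off our heading
    closing_speed_mps: float  # radial speed from Doppler, positive = approaching


def ground_speed_along_line_of_sight(det: Detection, ego_speed_mps: float) -> float:
    """Target speed along the line of sight after removing our own motion.

    Our motion contributes ego_speed * cos(azimuth) to the closing speed,
    so a stationary object comes out at (approximately) zero.
    """
    own_contribution = ego_speed_mps * math.cos(math.radians(det.azimuth_deg))
    return own_contribution - det.closing_speed_mps


def keep_moving_targets(dets, ego_speed_mps, threshold_mps=1.0):
    """Drop every detection whose ground speed is below the threshold."""
    return [d for d in dets
            if abs(ground_speed_along_line_of_sight(d, ego_speed_mps)) >= threshold_mps]


if __name__ == "__main__":
    ego = 30.0  # our own speed, m/s
    scene = [
        Detection("car ahead doing 20 m/s", 60.0, 0.0, 10.0),
        Detection("overhead sign", 80.0, 0.0, 30.0),
        Detection("parked fire truck", 70.0, 2.0, 30.0 * math.cos(math.radians(2.0))),
    ]
    for d in keep_moving_targets(scene, ego):
        print("tracked:", d.name)
```

Run as a script, only the moving car is printed: the overhead sign and the parked fire truck both come out at zero ground speed and are discarded together. Real radar processing is far more elaborate; the sketch only shows why a speed-based filter cannot separate the two stationary objects without height information.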

Advanced driver assistance systems equipped with millimeter-wave radar usually also carry a visual recognition system (a camera). However, visual recognition is not yet 100% reliable: the camera may be blocked or dirty, and depending on the algorithm, it may fail to recognize some objects on the road, such as stationary vehicles.
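The radar-to-camera hand-off can be sketched as a simple decision rule. Everything here is hypothetical: the function name, the single camera confidence score and the 0.7 threshold are assumptions for illustration, not how any particular manufacturer fuses its sensors. The object is assumed to be in our lane.

```python
# Hypothetical radar/camera hand-off rule for an object in our lane.
# Moving objects are handled by radar; stationary ones depend on the camera alone.

from typing import Optional


def should_brake(object_is_stationary: bool,
                 camera_confidence: Optional[float],
                 min_confidence: float = 0.7) -> bool:
    """Return True if this simplified system would brake.

    camera_confidence is None when the camera produced no detection at all
    (blocked or dirty lens, or an object the model does not recognize).
    """
    if not object_is_stationary:
        return True  # moving obstacle: the radar tracks it directly
    # Stationary: the radar has already discarded it, so only the camera can trigger braking.
    if camera_confidence is None:
        return False
    return camera_confidence >= min_confidence
```

With this rule, `should_brake(False, None)` and `should_brake(True, 0.9)` both brake, while `should_brake(True, 0.4)` and `should_brake(True, None)` do not. Those last two cases are the failure mode described here: once the radar has set a stationary object aside, an uncertain or blinded camera is enough for the system not to brake at all.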

Visual recognition depends heavily on how the machine-learning model was trained and on the recognition algorithm. Although recognition keeps improving, there are always situations that training has not yet covered. Visual recognition is also easily degraded by weather, dust and similar conditions. For all these reasons, visual recognition systems are not yet mature enough to take over the job on their own.

In short, no automated car on the market is 100% confident in itself; too many situations can arise on the road that the car cannot yet cope with. Technically, this is not self-driving at all; otherwise Level 2 systems would not require the driver to pay attention at all times. The “auto driving” label is largely publicity, meant to persuade people that the era of self-driving has arrived.